So Twitter has finally given Milo Yiannopoulos the boot — apparently for good — after the Breitbart “journalist” gleefully participated in, and egged on, a vicious campaign of racist abuse directed at Ghostbusters star Leslie Jones on Twitter earlier this week.

This wasn’t the first time that Milo, formerly known as @Nero, used his Twitter platform — at the time of his suspension he had 338,000 followers — to attack and abuse a popular scapegoat (or someone who merely mocked him online). It wasn’t even the worst example of his bullying.

What made the difference this time? Leslie Jones, who has a bit of a Twitter following herself, refused to stay silent in the face of the abuse she was getting, a move that no doubt increased the amount of harassment sent her way, but one that also caught the attention of the media. And so Milo finally got the ban he has so long deserved.

But what about all those others who participated in the abuse? And the rest of those who’ve turned the Twitter platform into one of the Internet’s most effective enablers of bullying and abuse?

In a statement, Twitter said it was reacting to “an uptick in the number of accounts violating [Twitter’s] policies” on abuse. But as the folks who run Twitter know all too well, the campaign against Jones, as utterly vicious as it was, wasn’t some kind of weird aberration.

It’s the sort of thing that happens every single day on Twitter to countless non-famous people — with women, and people of color, and LGBT folks, and Jews, and Muslims (basically anyone who is not a cis, white, straight, non-Jewish, non-Muslim man) being favorite targets.

Twitter also says that it will try to do better when it comes to abuse. “We know many people believe we have not done enough to curb this type of behavior on Twitter,” the company said in its statement.

> We agree. We are continuing to invest heavily in improving our tools and enforcement systems to better allow us to identify and take faster action on abuse as it’s happening and prevent repeat offenders. We have been in the process of reviewing our hateful conduct policy to prohibit additional types of abusive behavior and allow more types of reporting, with the goal of reducing the burden on the person being targeted. We’ll provide more details on those changes in the coming weeks.

This is good news. At least if it’s something more than hot air. Twitter desperately needs better policies to deal with abuse. But better policies won’t mean much if they’re not enforced. Twitter already has rules that, if enforced, would go a long way towards dealing with the abuse on the platform. But they’re simply not enforced.

Right now I don’t even bother reporting Tweets like this, because Twitter typically does nothing about them.

https://twitter.com/Bobcat665/status/735282887965085697

And even when someone does get booted off Twitter for abuse, they often return under a new name — and though this is in direct violation of Twitter’s rules, the ban evaders are so seldom punished for this violation that most don’t even bother to pretend to be anyone other than they are.

Longtime readers here will remember the saga of @JudgyBitch1 and her adventures in ban evasion.

Meanwhile, babyfaced white supremacist Matt Forney’s original account (@realMattForney) was banned some time ago; he returned as @basedMattForney. When this ban-evading account was also banned, he got around the ban by starting up yet another ban-evading account, under the name @oneMattForney, and did his best to round up as many of his old followers as possible.

https://twitter.com/onemattforney/status/753087810006085634

A few days later, Twitter unbanned his @basedMattForney account.

And here’s yet another banned Twitterer boasting about their success in ban evasion from a new account:

https://twitter.com/_AltRight_Anew/status/755643864036339716

And then there are all the accounts set up for no other reason than to abuse people. Like this person, who set up a new account just so they could post a single rude Tweet to me:

In case you’re wondering, the one person this Twitter account follows is, yes, Donald Trump.

And then there’s this guy, also with an egg avatar, and a whopping three followers, who has spewed forth hundreds of nasty tweets directed mostly at feminists.

Here are several he sent to me, which I’ve lightly censored:

And some he’s sent to others.

So, yeah. Twitter is rotten with accounts like these, set up to do little more than harass. And if they ever get banned, it only takes a few minutes to set up another one.

Milo used his vast number of Twitter followers as a personal army. But you don’t need a lot of followers to do a lot of damage on Twitter. All you really need is an email address and a willingness to do harm.

It’s good that Twitter took down one of the platform’s most vicious ringleaders of abuse. But unless Twitter can deal with the small-time goons, with their anime avatars and egg accounts, as well, it will remain one of the Internet’s most effective tools for harassment and abuse.

@Gert,

When you connect to the internet, your internet provider assigns you an IP address, like, 92.171.34.8 or something like that. All machines on the internet have an IP address, which is a (sort of) unique identifier. It’s your postal code. These can be dynamic – when you disconnect from your internet provider, and reconnect, the IP address they assign you when you reconnect may not be the same as the one you had before.

(There’s also complex gobbledygook when you start including local networks and VPNs and routers and whatnot, but that’s not important.)

MAC addresses, on the other hand, are permanent identifiers assigned to your machine directly – specifically, to your Ethernet card (or its equivalent). They’re written into the static memory of your machine (and often printed on a tag on the outside of the machine, so you can read it), and they’re unique. Switches and routers use them to identify which port to send messages to.

Banning by IP address can be circumvented in a few ways. If your IP is assigned dynamically (AOL, Yahoo and CompuServe used to do this, as did most of the old dial-ups), you just reconnect and you have a new IP. Alternatively, there are servers out there that act as anonymizers – they let you “mask” your IP with one they assign dynamically, so even if you have a static IP you can pass your messages through that service and have as many free IPs as you want.

Banning by MAC address would mean that you’re banning a specific physical device. You ban a phone, a laptop, a server port. Those can’t be reassigned dynamically, they’re a permanent identifier for that device. You have to go and physically buy and install a new device to start a new egg. Still far from perfect, but it’s interesting to consider whether it would be an improvement!

Thinking more about it, a MAC ban would be a good way for consequences to enter real life. Like @Pendraeg said, families would get banned, public computers at libraries would get banned, etc. It’d have a lot of splash-back onto innocent people.

This is bad, but this is also how consequences for bad behaviour come about. Get mom’s laptop banned for sass-talkin’ on the twitters? That’s a surefire way to get mom to be more concerned about what her child is doing on the internet. Library computer gets banned? That’s how you lose your library card and get banned from there.

All of a sudden, this jerk behaviour online starts generating real life consequences. I’m warming up to the concept.

@Scildfreja:

Thanks (I think I actually understood that) 😉

@ scildfreja

Some of those consequences are verging on collective punishment; which might be controversial.

But I do agree that a big problem with, and perhaps the reason for, Internet vileness is that there are no real-world consequences. Even the old social control methods of stigmatisation and shame don’t seem to work when people can be anonymous and nobody seems to feel shame anymore. In a real-world community being shunned can have an impact, but in a virtual community you’ll always find fellow travellers to support you.

So far though the only thing I can think of is to bring back duelling; which might not be everybody’s cup of tea of course.

I’m pretty certain that with some devices you can set a MAC address manually (overriding the one hard-coded in the Ethernet interface). In fact I’ve just checked, and I can do this on my router – so a MAC ban isn’t going to work that well either.

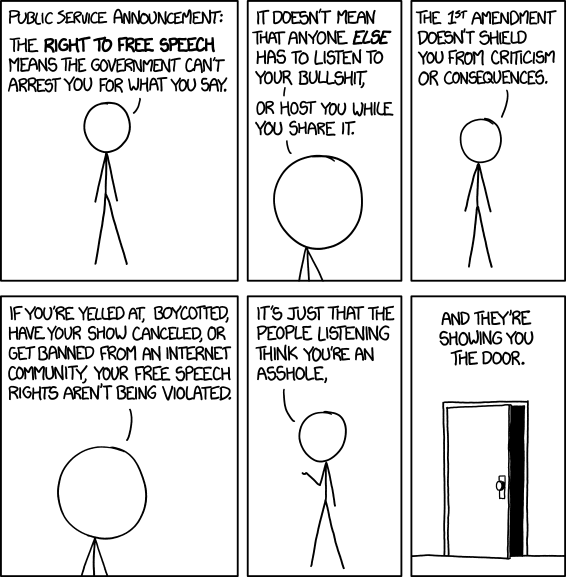

Anyway, I think it is time for this – which I might just also tweet to #freeMilo

What about having to give Twitter a piece of identifying information to get an account, like a credit/debit card, a bank account number, or a tax ID if it’s a business account? That would make it harder to create sockpuppet accounts, because a person is only going to have so many valid cards to use. It would also mean that if someone threatens violence, the target can report it to law enforcement, and law enforcement can get a warrant to obtain that identifying info from Twitter. It might seem invasive, but it might be the only way to curb the worst of it. I’m kind of starting to think that internet anonymity is a failed experiment.

@Scildfreja But that can have more consequences than just some kid getting yelled at by their folks. What if mom or dad uses Twitter as part of their job?

It wouldn’t be a common occurrence, but it could be really ugly for some people. Maybe some sort of cross-referencing of IP and MAC addresses, or having Twitter support a primary account with child accounts, could deal with that, but that’d be a major software rework.

I think in the future we’ll have secure internet IDs which will always tie mainstream online activity to an individual.

Anonymous access will have to be permitted somehow, for those at risk, but with additional hurdles to prevent abusive behaviour, and the option for any individual to block all anonymous messaging.

The dilemma with Twitter is that in many countries, especially those with repressive regimes, it’s the only free form of communication.

How would the Arab Spring have fared if the organisers had had to worry about their identities being known to the secret police? Often it’s the only way of getting a message out to the outside world when there’s some dodgy event occurring internally. If I lived in China or KSA, for instance, I’m not sure I’d use Twitter when even following someone controversial or disapproved of could have serious consequences.

This of course does put Milo’s claims of persecution into perspective; but how do we strike a balance between preventing abuse and actual state censorship (in the proper sense of the word)?

I can’t pretend to know the answer.

@Alan Robertshaw That’s a good point too, the whole thing is really a quagmire. I’m sure eventually we’ll find solid solutions but getting there sure does suck.

Reason number eleven billion trillion why rape victims have reason to distrust the system

http://jezebel.com/a-rape-victim-was-thrown-in-jail-for-a-month-after-brea-1783976169

To think people still don’t believe that rape culture exists.

Yeah the problem of individual security in totalitarian states does come into play when trying to find ways for people to be accountable for their actions.

Now, a compromise I would suggest is handling it on a country-by-country basis, but that might prompt some higher powers to start exerting control over Twitter.

At this point, the best overall fix I can find is having a dedicated team that are sticklers for the rules already set and aren’t afraid of exercising moderation.

Anything more I can’t really figure out yet.

Alternate Universe Alan makes some good points but so does the one from our reality. 😉

@Jamesworkshop

I like anthropomorphic memes as much as the next channer, but I suspect those Mario gijinka are celebrating something to do with 8chan.

Post deleted because I thought better of it.

I could be missing the point here, but wouldn’t having that much fertilizer improve the aesthetics of a grave quite a lot in the long run?

@ pitshade

🙂

Heh, the only way I could ever make a valid point is if there really is an infinite number of universes where every possibility is played out.

Milo in that Kernel article:

The man’s word is obviously not his bond! 😉

So many accounts have been set up with the sole purpose of abusing Australian feminist writer Clementine Ford. You’d think something more could be done.

I imagine this’ll spark a “debate” among Milo fans that Milo should be able to use the platform, despite his repeated breaking of Twitter’s terms of service. Because reasons. (No doubt they’ll try to play the homophobia card, because hey, the SJWs do it!)

Let’s see if I can come up with a preemptive metaphor/argument (because I can literally write this shit in advance with these people):

Say I lend someone a pencil (it can be Milo for the purposes of this metaphor if you so choose), on the sole condition that they don’t chew on it.

This person agrees to my single condition, and borrows the pencil.

I have to leave the room for a moment for whatever reason, but say I come back to find that person chewing on my pencil.

Am I within my rights to take my pencil back and make a mental note to never lend that person my pencil or other writing utensils again? Absolutely. That person has demonstrated that they are incapable of following the verbal contract they agreed to. They cannot be trusted with my materials.

Same thing applies here. When you use Twitter’s services (for free, might I add), you’re agreeing to their terms of service. Their rules. One of which is “don’t be a fucking asshole to other people”. Granted, Twitter has been really lax about enforcing this rule, but it’s there. And breaking it is grounds for banning from the service, because you couldn’t follow the rules.

Why? Because you’re using Twitter’s resources. You’re using their website, which they had to pay to have designed and they pay to maintain and host, you’re using their servers, which they also pay to maintain, and you’re using their brand, which they paid to build.

If you’re using their resources and breaking their rules, it’s much the same as chewing on someone else’s pencil after they asked you not to. It’s a violation of boundaries, and it can be, and should be, grounds for you to not use that service anymore, because you’ve proved you cannot be trusted with their resources.

On top of that, it makes Twitter look bad. Awful, even.

Just look at how many people in this thread alone say they don’t or won’t use the service because of random shit-heads abusing it to harass others.

@Gert

How hypocritical of him. Or is it one of those double standards I keep hearing of?

@PI

I wonder if they really believe increased moderation would be more expensive than the real loss of potential users. Though on that point I’d need some hard numbers to make a solid statement.

Since trolls favor rapid inundation of their targets, and individual trolls tend to focus on just a few forums and threads, I wonder if there’s a way to physically slow the trolls down without compromising the experience of good faith users? Maybe there are algorithms that can automatically detect anti-social behaviors (X tweets in Y minutes to the same account + certain keywords + user reports) and trip a flag. If the flag is tripped, into moderation they go, and they can’t post again for another hour.

Or instead of moderation, quarantine the messages so they can rage to their heart’s content, but no one but the sender will ever see them. I like ghosting better than banning. It takes a while for the troll to figure out they’ve been ghosted, and it takes some effort to verify, during which time the victim gets a breather (which they don’t if their attacker gets banned and immediately pops up again with a new throwaway email alias). More importantly, ghosting isolates some of the nastier messages away from the public eye, thus removing one of the big incentives of cyberbullying.

Ghosting doesn’t have to be permanent; it could be used as a mandatory cool-down period. If you’re only allowed to tweet once an hour, are you gonna waste that tweet on “no ur a mangina cuck lololol”?

Make shitposting cost something, so that people will think twice about doing it.
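The flag-and-ghost idea in the comment above could be sketched roughly like this. It is a minimal Python illustration, not anything Twitter actually runs: the class name `GhostingFilter`, the thresholds (more than five tweets to one account in ten minutes, three user reports, a one-hour cool-down), the keyword list, and the rule that any two of the three signals trip the flag are all hypothetical choices standing in for the comment’s “X tweets in Y minutes + certain keywords + user reports”.

```python
import re
from collections import defaultdict, deque

# Hypothetical thresholds: the comment only says "X tweets in Y minutes".
MAX_TWEETS = 5                 # X: tweets allowed per window to one target
WINDOW_SECONDS = 10 * 60       # Y: ten-minute sliding window
COOLDOWN_SECONDS = 60 * 60     # one-hour ghosting period
REPORT_THRESHOLD = 3           # user reports before reports count as a signal
FLAG_KEYWORDS = {"cuck", "mangina"}  # illustrative keyword list only


class GhostingFilter:
    """Flags senders on combined signals and ghosts them for a cool-down."""

    def __init__(self):
        self.recent = defaultdict(deque)   # (sender, target) -> timestamps
        self.reports = defaultdict(int)    # sender -> number of user reports
        self.ghosted_until = {}            # sender -> end of cool-down

    def report(self, sender):
        self.reports[sender] += 1

    def is_ghosted(self, sender, now):
        return self.ghosted_until.get(sender, 0) > now

    def submit(self, sender, target, text, now):
        """Return True if the tweet is public, False if only the sender sees it."""
        if self.is_ghosted(sender, now):
            return False

        # Record this tweet, then drop timestamps older than the window.
        window = self.recent[(sender, target)]
        window.append(now)
        while now - window[0] > WINDOW_SECONDS:
            window.popleft()

        # Count independent signals; in this sketch two of three trip the flag.
        signals = 0
        if len(window) > MAX_TWEETS:
            signals += 1
        if FLAG_KEYWORDS & set(re.findall(r"[a-z]+", text.lower())):
            signals += 1
        if self.reports[sender] >= REPORT_THRESHOLD:
            signals += 1

        if signals >= 2:
            self.ghosted_until[sender] = now + COOLDOWN_SECONDS
            return False
        return True
```

From the flagged sender’s side nothing visibly changes: `submit` just returns `False`, meaning the tweet would be shown only to its author until the cool-down expires. That is the ghosting behaviour described above rather than a ban, so there is no obvious prompt to go and register a fresh account.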

I do follow some good people on Twitter. But even just the replies they get are horribly nasty.

It’s, of course, not limited to SJWs at all. I follow a French lawyer, and he regularly gets abusive tweets just because he dares to talk about why criminals need counsel too.

It’s also, sadly, not a problem specific to Twitter. Literally every site that doesn’t require an account to comment, and a lot of sites that do, is littered with rape-culture jokes, calls to kill all Muslims, and people who think a French politician’s idea of arming police with rocket launchers against terrorism is a good idea.

Paradoxy said

Hey, look; here’s another one!

I mean, sometimes I’m tempted because it’d be cool to follow, say, Stephen Colbert or tweet in solidarity with Leslie Jones (and follow her) but nah. I agree with Tabby Lavalamp over at Pharyngula;